September 2025

Healthcare is one of the most data-rich and capital-intensive sectors, yet it remains decades behind in analytics. High-stakes decisions rely on incomplete data and on systems that are slow, manual, error-prone, and expensive. The result is billion-dollar missteps, extended timelines in an industry already operating on decade-long horizons, and delays in bringing life-saving medicines to patients.

Despite rapid advances in large language models (LLMs), these breakthroughs have yet to meaningfully change how strategic decisions are made in biotech. Today’s LLMs are fluent language tools: they can summarize dense papers, extract entities, or polish prose, but language is not the same as evidence-backed strategy.

Biotech demands reasoning across fragmented, multi-modal data: trials, patient outcomes, regulatory precedent, and competitive context, all shifting in real time.

Addressing this calls for platforms that are purpose-built for biotech, fluent in the networked language of biology, and capable of turning vast, messy evidence into actionable, trusted insights. Only with tools like these will strategic decisions in biotech and healthcare match the rigor the field demands.

In this whitepaper, we (1) examine how strategic decisions in biotech are made today, (2) show where today’s LLMs help and where they fail, (3) propose a bio-native architecture built on a shared data foundation, multi-hop reasoning, and UX-driven validation loops, and (4) introduce LABI, Luma Group’s AI for Biotech Intelligence, and describe how we aim to apply these principles in practice.

How Are Strategic Decisions Made in Biotech Today?

Consider a typical diligence scenario to evaluate a new therapeutic candidate. You ask an AI system: “What is the current standard of care in this disease, and how does this candidate compare on endpoints, patient selection, safety, and competitive position? Pull the underlying trials, relevant patient datasets, regulatory precedents, and commercial context, and return it as a citation-backed memo with tables.”

It is a compelling vision: a single click yielding evidence-backed answers that can be trusted. But when pushed with inquiries like this, today’s LLMs fall short. Results are often incomplete or inaccurate, and hallucinations make them unfit for high-stakes strategic decisions.

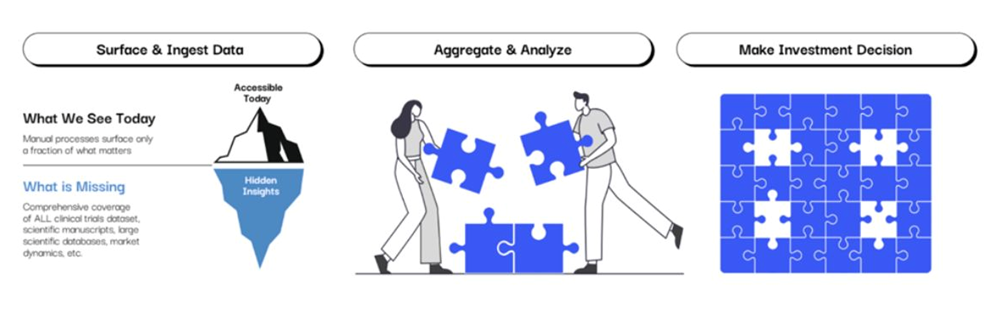

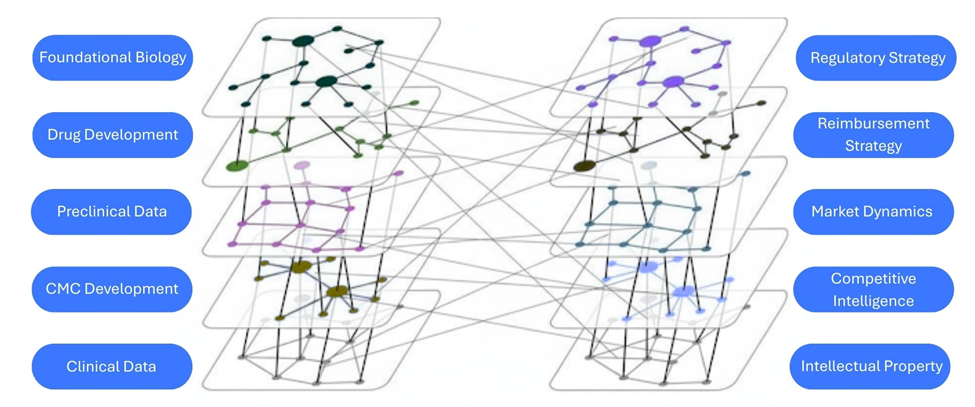

Beyond these limits, the diligence process in biotech is uniquely complex and fundamentally different from generalist investing. It requires deep, cross-functional expertise across domains such as foundational biology, discovery and development, clinical data, regulatory precedent, reimbursement strategy, and commercial dynamics. Each domain is a fragmented, hard-to-reach pool of millions of data points that are still largely hunted down and tracked manually. Connecting them into a coherent view takes months of labor-intensive, error-prone work. Moreover, the landscape is constantly shifting. As relevant information surfaces, connections emerge both within and across domains, and these must then be tested against multiple possible outcomes. A failure in a novel drug class, for example, can ripple across development plans, commercial potential, and competitor pipelines.

To address these complexities, the industry still takes a piecemeal approach: scaling by headcount, subscribing to multiple data platforms, augmenting existing workflows with generic AI, and tracking and pulling data into manual workflows. As a result, high-stakes biotech decisions are made with fragmented, incomplete data, through manual, error-prone processes that risk costly missteps.

Figure 1: Biotech decision-making today: High-stakes biotech decisions are made with fragmented, incomplete data, through manual, error-prone processes that risk costly missteps.

AI’s Impact and Limitations in Biotech Decision Making

The real constraints on AI in biotech are not computational power or algorithms but rather data and complexity.

Existing LLM successes hit complexity walls: Even where LLMs work well, they struggle as complexity grows. Coding assistants get immediate feedback when code compiles or breaks, yet they falter on large codebases. Travel-planning LLMs can draw on standardized databases but fail when preferences conflict or constraints shift. These examples show that AI breaks down when reasoning must span interconnected systems, even with built-in advantages like immediate feedback and standardized data.

Biology uniquely amplifies these challenges: Biotech faces the same complexity-scaling problems without either of those structural advantages. The heterogeneity of biological data creates fundamental problems: clinical trials in the same indication may report results in completely different formats, reflecting disagreements about what constitutes meaningful measurement in biological systems. Reasoning becomes exponentially more complex when drug interactions, competitive landscapes, protein networks, and patient populations are layered on.

Figure 2: Complexity and network effects in biotech: Biotech complexity emerges from connections: foundational biology to clinical data, regulatory strategy to reimbursement, market dynamics to competitive intelligence. Each layer interlocks, creating numerous reasoning challenges.

User experience solutions remain largely unexplored: Other domains experiment across the full spectrum from autocomplete to autonomous agents, yet even well-resourced teams are pivoting back to human-in-the-loop systems for complex tasks. Biotech has barely explored beyond basic autocomplete. This represents an opportunity: biotech can learn from experimentation in other domains and design purpose-built solutions for its unique data structures and reasoning requirements.

Building a Bio-Native Intelligence Platform

Building AI systems that can reliably reason across interconnected biotech datasets requires three fundamental design principles:

Get the data in one place, and one language: Before clever prompts, we need a common evidence layer of disparate data pulled into a standard format with clear labels. Today, the facts live in a hundred sources, from registries to PDFs to supplements to figure panels, and none of the sources describe their findings the same way. While industries like travel planning have structured data and flight or hotel aggregators, biology lacks both standardization and aggregation.

LLMs should read messy sources, suggest mappings, line up fields that mean the same thing, and flag conflicts for a human to review, but the shared format and hub must come first. As data lands in this structure, simple checks keep things comparable: units and scales match, time frames line up, patient groups are matched, and endpoints mean the same thing. Every fact keeps its source, date, and version, so teams can trace provenance and monitor changes. The layer should also be graph-native, so platforms can follow and justify links and support the networked reasoning biology requires.
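The checks above can be made concrete with a minimal Python sketch. It assumes a single endpoint (median overall survival), a hand-written label map, and months as the canonical unit; the names `FIELD_MAP`, `TO_MONTHS`, `Fact`, `harmonize`, and `find_conflicts` are hypothetical, and the records are invented for illustration. In the architecture described here, an LLM would suggest the mappings that are hard-coded below, and a human would review whatever lands in `needs_review` or `conflicts`.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional, Tuple

# Hypothetical mapping from source-specific labels to one canonical field name.
FIELD_MAP = {
    "mOS": "median_overall_survival",
    "median OS": "median_overall_survival",
    "Median overall survival": "median_overall_survival",
}

# Approximate conversion factors into the canonical unit (months) for time endpoints.
TO_MONTHS = {"months": 1.0, "weeks": 12.0 / 52.0, "days": 12.0 / 365.25}


@dataclass(frozen=True)
class Fact:
    subject: str     # trial or cohort identifier
    field: str       # canonical field name
    value: float     # value expressed in the canonical unit
    unit: str
    source: str      # provenance: where the fact came from
    retrieved: date  # provenance: when it was captured
    version: int     # provenance: version of the source record


def harmonize(raw: dict) -> Optional[Fact]:
    """Map a raw record onto the canonical schema, or return None for human review."""
    field = FIELD_MAP.get(raw["field"])
    factor = TO_MONTHS.get(raw["unit"])
    if field is None or factor is None:
        return None  # unknown label or unit: flag rather than guess
    return Fact(raw["subject"], field, round(raw["value"] * factor, 2), "months",
                raw["source"], raw["retrieved"], raw["version"])


def find_conflicts(facts: List[Fact],
                   tolerance: float = 0.5) -> Dict[Tuple[str, str], List[Fact]]:
    """Group facts by (subject, field) and return the groups whose values disagree."""
    groups: Dict[Tuple[str, str], List[Fact]] = {}
    for f in facts:
        groups.setdefault((f.subject, f.field), []).append(f)
    return {key: fs for key, fs in groups.items()
            if max(x.value for x in fs) - min(x.value for x in fs) > tolerance}


# Illustrative records only; these are not real trial results.
raw_records = [
    {"subject": "TRIAL-A", "field": "mOS", "value": 14.2, "unit": "months",
     "source": "registry", "retrieved": date(2025, 1, 10), "version": 1},
    {"subject": "TRIAL-A", "field": "median OS", "value": 61.7, "unit": "weeks",
     "source": "conference abstract", "retrieved": date(2025, 2, 3), "version": 1},
    {"subject": "TRIAL-A", "field": "Median overall survival", "value": 11.0,
     "unit": "months", "source": "press release", "retrieved": date(2025, 3, 1),
     "version": 2},
    {"subject": "TRIAL-A", "field": "ORR", "value": 0.31, "unit": "fraction",
     "source": "supplement", "retrieved": date(2025, 3, 1), "version": 1},
]

facts = [harmonize(r) for r in raw_records]
needs_review = [r for r, f in zip(raw_records, facts) if f is None]
conflicts = find_conflicts([f for f in facts if f is not None])
```

Here the value reported in weeks is converted to 14.24 months and lines up with the registry, the unmapped `ORR` record is routed to review, and the 11.0-month figure is flagged as a conflict. Because every `Fact` keeps `source`, `retrieved`, and `version`, a reviewer can see at once which source the outlier came from. In a graph-native store, each `Fact` would naturally become an edge from a trial node to an endpoint node, with its provenance attached as edge attributes.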

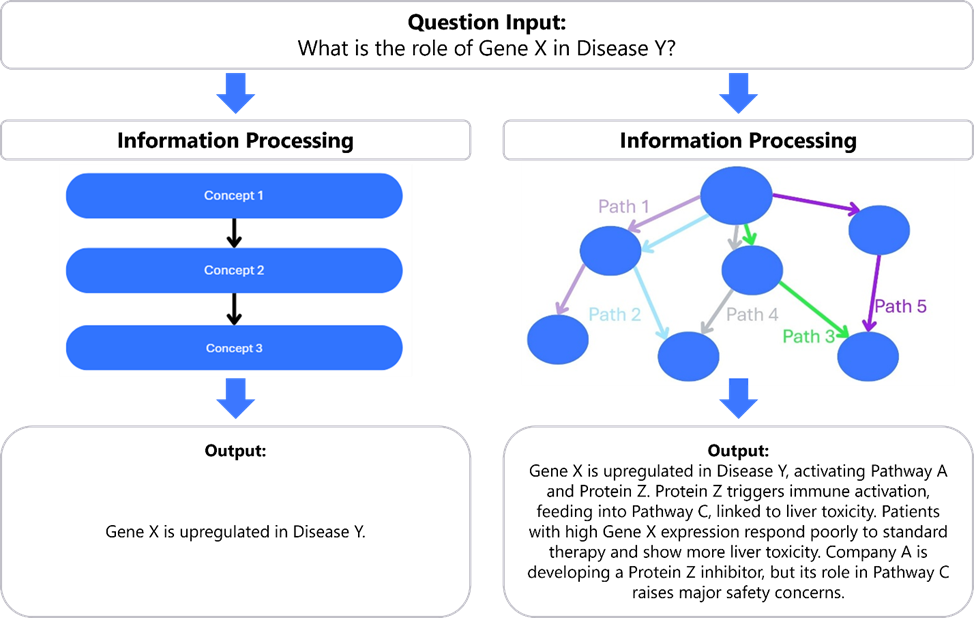

Build models that reason over networks: Networks underpin biology, so our systems must reason over links, not just lines of text. Defensible answers require walking through several connected steps, so-called “multi-hop reasoning”. Modern LLMs are strong at single lookups and fluent summaries, but when multi-hop reasoning is required to respond to a user, they often lose track of constraints, mix cohorts, or skip checks.

At the system layer, perhaps that means moving from “chain-of-thought” to “web-of-thought”: approaches that can traverse multiple paths in a graph at once, check constraints, and reconcile conflicts before proposing an answer.
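A toy version of “web-of-thought” traversal might look like the Python sketch below. It is an illustration under stated assumptions, not a proposed implementation: the graph, node names, and the helpers `all_paths`, `single_cohort`, and `reconcile` are hypothetical. Each edge carries an effect sign (+1 activates, -1 inhibits) and, optionally, the patient cohort it was observed in. The sketch enumerates every path up to a hop limit, discards paths that violate a constraint (here, mixing cohorts), and reconciles the surviving paths per cohort instead of collapsing them into one answer.

```python
from typing import Dict, Iterator, List, Optional, Set, Tuple

# (target node, effect sign: +1 activates / -1 inhibits, cohort or None if cohort-independent)
Edge = Tuple[str, int, Optional[str]]
# A traversed hop: (from node, to node, sign, cohort)
Hop = Tuple[str, str, int, Optional[str]]

# Hypothetical toy graph; not real biology.
GRAPH: Dict[str, List[Edge]] = {
    "drug_X": [("target_T", -1, None)],
    "target_T": [("pathway_P", +1, None), ("biomarker_B", +1, "cohort_2")],
    "pathway_P": [("tumor_growth", +1, "cohort_1"), ("tumor_growth", -1, "cohort_2")],
    "biomarker_B": [("tumor_growth", +1, "cohort_1")],
}


def all_paths(graph: Dict[str, List[Edge]], start: str, goal: str,
              max_hops: int) -> Iterator[List[Hop]]:
    """Depth-first enumeration of every simple path from start to goal."""
    stack = [(start, [], {start})]
    while stack:
        node, path, seen = stack.pop()
        if node == goal:
            yield path
            continue
        if len(path) >= max_hops:
            continue
        for nxt, sign, cohort in graph.get(node, []):
            if nxt not in seen:
                stack.append((nxt, path + [(node, nxt, sign, cohort)], seen | {nxt}))


def single_cohort(path: List[Hop]) -> bool:
    """Constraint: one path may not chain evidence from different patient cohorts."""
    return len({c for _, _, _, c in path if c is not None}) <= 1


def reconcile(graph: Dict[str, List[Edge]], start: str, goal: str,
              max_hops: int = 4) -> Dict[Optional[str], str]:
    """Net effect of start on goal per cohort, flagging cohorts whose paths disagree."""
    signs: Dict[Optional[str], Set[int]] = {}
    for path in all_paths(graph, start, goal, max_hops):
        if not single_cohort(path):
            continue  # constraint violated: drop the path rather than mix cohorts
        cohorts = {c for _, _, _, c in path if c is not None}
        cohort = cohorts.pop() if cohorts else None
        net = 1
        for _, _, sign, _ in path:
            net *= sign  # signed-graph convention: two inhibitions make an activation
        signs.setdefault(cohort, set()).add(net)
    return {c: "conflict" if len(s) > 1 else ("decreases" if s == {-1} else "increases")
            for c, s in signs.items()}


verdicts = reconcile(GRAPH, "drug_X", "tumor_growth")
```

Three paths connect `drug_X` to `tumor_growth`. The one routed through `biomarker_B` is dropped because it chains a `cohort_2` observation with a `cohort_1` observation. The remaining two point in opposite directions only because they come from different cohorts, so the sketch returns a cohort-conditioned answer (`decreases` in `cohort_1`, `increases` in `cohort_2`) rather than a single, misleading verdict.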

At the model layer, we might give the model a map of these connections and make those links matter. Instead of treating everything as words in a row, the model should score and select hops (e.g., from a target to its pathways, then to assays and cohorts) and weigh how strong each link is. Along the way, we can encode simple and transparent requirements, so relationships shape the result, not just nearby text. Adopting these principles should yield answers that are traceable, comparable, and reproducible: the qualities high-stakes biotech decisions require.
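One simple way to let link strength decide which hops to take is to treat each link’s strength as a probability-like weight in (0, 1] and look for the chain whose product of strengths is largest. Taking -log of each weight turns this into a shortest-path problem with non-negative costs, which Dijkstra’s algorithm solves. The Python sketch below uses that classic graph technique as a stand-in: the graph, the weights, and `strongest_path` are hypothetical, and a model-layer system as described above would learn such scores rather than read them from a table.

```python
import heapq
import math
from typing import Dict, List, Optional, Tuple

# Hypothetical evidence graph: node -> [(neighbor, link strength in (0, 1])].
GRAPH: Dict[str, List[Tuple[str, float]]] = {
    "target_T": [("pathway_A", 0.9), ("pathway_B", 0.6)],
    "pathway_A": [("assay_1", 0.5), ("assay_2", 0.8)],
    "pathway_B": [("assay_1", 0.95)],
    "assay_1": [("cohort_C", 0.9)],
    "assay_2": [("cohort_C", 0.7)],
}


def strongest_path(graph: Dict[str, List[Tuple[str, float]]], start: str,
                   goal: str) -> Tuple[Optional[List[str]], float]:
    """Return the path maximizing the product of link strengths, and that product.

    Maximizing a product of weights in (0, 1] is the same as minimizing the sum
    of -log(weight); those costs are non-negative, so Dijkstra's algorithm applies.
    """
    heap: List[Tuple[float, str, List[str]]] = [(0.0, start, [start])]
    best = {start: 0.0}
    while heap:
        cost, node, path = heapq.heappop(heap)
        if node == goal:
            return path, math.exp(-cost)
        if cost > best.get(node, math.inf):
            continue  # stale queue entry superseded by a cheaper route
        for nxt, strength in graph.get(node, []):
            new_cost = cost - math.log(strength)
            if new_cost < best.get(nxt, math.inf):
                best[nxt] = new_cost
                heapq.heappush(heap, (new_cost, nxt, path + [nxt]))
    return None, 0.0


path, strength = strongest_path(GRAPH, "target_T", "cohort_C")
```

In this toy graph the strongest first hop (`pathway_A`, 0.9) is not on the best chain: the route through `pathway_B` wins with 0.6 × 0.95 × 0.9 = 0.513, against 0.9 × 0.8 × 0.7 = 0.504 for the route a greedy, hop-by-hop choice would take. Scoring whole chains rather than single hops is what lets the weaker-looking first link win.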

Figure 3: Chain-of-thought vs. Web-of-thought: Left: a linear “chain-of-thought” compresses evidence into one sentence, ignoring dependencies and hidden constraints. Right: a web-of-thought maps multi-hop links and conditions for action. Biology is a network, not a list, so credible answers require multi-hop reasoning with checks and citations, not a single pass through text.

Create a user experience that provides validation and feedback loops: Unlike coding LLMs, where feedback is immediate, in biotech the impact of a single choice may not be clear until years later in the clinic or market. Reinforcement learning addressed similar challenges through simulation, as in AlphaGo and AlphaZero, but full simulations of biotech investment decisions are not feasible. Adversarial AI, such as digital-twin investors that stress-test recommendations, is a possible long-term path but remains speculative. A more immediate approach is to use interaction patterns as training signals. Analysts already perform checks and cross-validations that could be standardized. Systems can learn from explicit corrections as well as implicit behaviors: which trial comparisons are explored or ignored, which analyses are saved or discarded, and how experts navigate between drug mechanisms. In this way, the interface itself becomes validation infrastructure, creating the feedback loops biotech has lacked and offering a new path to making AI trustworthy enough to guide high-stakes decisions.

Taken together, these principles shift biotech analysis from scattered, manual workflows toward the “single-click diligence” vision. Modernized data pipelines provide standardized, queryable inputs; network-native models generate multi-hop insights that reflect the interconnected structure of biology; and validation built into the user experience lets systems continuously refine their domain expertise. The result is not just fluent AI but trusted decision infrastructure, capable of supporting the high-stakes choices that define biotech investing and strategic decision-making.
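The idea of treating interactions as training signals can be sketched very simply. The Python below is a minimal illustration under loud assumptions: the event names, the reward values in `SIGNAL_WEIGHTS`, and `update_priorities` are all hypothetical, and an exponential moving average stands in for whatever learning method a production system would actually use (for example, preference learning or reinforcement learning from human feedback). It shows only the core loop: logged analyst behavior nudges how highly each comparison or analysis is ranked next time.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical reward for each interaction type; deliberate actions weigh most.
SIGNAL_WEIGHTS: Dict[str, float] = {
    "corrected": -1.0,  # analyst explicitly fixed the system's output
    "discarded": -0.5,  # analysis thrown away
    "ignored": -0.1,    # comparison shown but never opened
    "explored": 0.2,    # comparison opened and inspected
    "saved": 0.5,       # analysis kept for later
    "confirmed": 1.0,   # analyst explicitly endorsed the output
}


def update_priorities(events: Iterable[Tuple[str, str]], priors: Dict[str, float],
                      lr: float = 0.2) -> Dict[str, float]:
    """Nudge each item's priority toward the reward of each observed interaction.

    Exponential moving average: priority <- priority + lr * (reward - priority).
    Items without a prior start at 0.0.
    """
    scores: Dict[str, float] = defaultdict(float, priors)
    for item, action in events:
        reward = SIGNAL_WEIGHTS[action]
        scores[item] += lr * (reward - scores[item])
    return dict(scores)


# Illustrative event log: (item shown to the analyst, what the analyst did with it).
events: List[Tuple[str, str]] = [
    ("trial_A_vs_B", "saved"),
    ("trial_A_vs_C", "ignored"),
    ("trial_A_vs_C", "discarded"),
    ("trial_A_vs_B", "confirmed"),
]
scores = update_priorities(events, {"trial_A_vs_B": 0.0, "trial_A_vs_C": 0.0})
ranking = sorted(scores, key=scores.get, reverse=True)
```

After these four events, the saved-then-confirmed comparison rises to 0.28 and the ignored-then-discarded one falls to -0.116, so the former is surfaced first. Explicit corrections and confirmations carry the largest weights and passive signals the smallest, on the assumption that a deliberate edit says more than a skipped row; the learning rate `lr` controls how quickly older judgments are overwritten.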

As scientists, operators, and investors, we make decisions that demand comprehensive coverage of the data landscape. We need foundational biology, clinical results, regulatory shifts, and more, distilled into clear signals and continuously updated to reflect the dynamic nature of the data. Over the past two years, we have tested existing analytical and AI tools in our workflows and observed firsthand what many across the industry also recognize: generic systems fall short in biotech.

This gap is why we built LABI, Luma Group’s AI for Biotech Intelligence. LABI is an end-to-end platform purpose-built for biotech, designed to deliver comprehensive coverage of all critical data sources, translate that information into validated, traceable, decision-ready insights, and keep those insights up to date in real time as new evidence becomes available.

Our team has been addressing the foundational limitations of applying generic AI to biology by building a biology-native platform. Rather than augmenting existing workflows with off-the-shelf tools, we are designing LABI from the ground up to capture the full complexity of the field.

LABI aggregates, curates, and harmonizes critical data pools, including peer-reviewed manuscripts, patient-level datasets, biorepositories, clinical trial records, regulatory filings, and commercial and financial datasets, spanning millions of sources. This enables LABI to speak the language of biology (graphs, tables, statistical analyses, and more) and to reason in a highly networked, real-time manner, so insights are both comprehensive and accurate.

At its core, LABI makes biology navigable. It organizes genes, proteins, pathways, samples, and outcomes into a connected map, so comparisons are valid. Every answer is anchored in evidence and linked directly back to sources to eliminate the frustration and dangers of hallucinated outputs. Agentic AI workflows continuously scan and cross-check new information, surfacing what has changed, when it changed, and why it matters. The platform incorporates feedback loops so that every interaction strengthens evidence prioritization, sharpens comparisons, and hardens checks, with improvements compounding over time.

LABI is the platform we long wished we had as biotech investors, a platform that helps uncover the most meaningful data and insights in a field defined by complexity and constant change. Making the wrong choice or missing the right one has enormous consequences: missed signals can cost billions, delay decade-long timelines, and ultimately slow the delivery of life-saving medicines. The impact of this challenge extends far beyond capital allocation, touching everyone in healthcare who makes high-stakes strategic choices, from clinical development to corporate strategy. This is a mission we are deeply committed to, and we look forward to sharing more as it evolves.

Acknowledgements

Luma Group would like to acknowledge Manav Kumar for his thoughtful discussion and contributions to this whitepaper.